I have the 3007WFP-HC, and run it on the minimum brightness setting to avoid eye strain. Another benefit of doing this is lower power usage. On the lowest brightness setting, I am typically pulling 63 W at the wall vs. 93 W for the highest (default) setting.
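For a rough sense of what those two readings add up to, here is a small sketch of the arithmetic. The 63 W and 93 W figures are the ones quoted above; the 8 hours of use per day is purely an assumption for illustration, not something measured.

```python
# Wall-power readings quoted above, in watts.
LOW_BRIGHTNESS_W = 63
HIGH_BRIGHTNESS_W = 93


def savings(low_w, high_w, hours_per_day=8.0, days_per_year=365):
    """Return (watts saved, percent saved, kWh saved per year).

    hours_per_day and days_per_year are assumed usage figures.
    """
    saved_w = high_w - low_w
    percent = 100.0 * saved_w / high_w
    kwh_per_year = saved_w * hours_per_day * days_per_year / 1000.0
    return saved_w, percent, kwh_per_year


saved_w, percent, kwh = savings(LOW_BRIGHTNESS_W, HIGH_BRIGHTNESS_W)
print(f"{saved_w} W saved ({percent:.1f}%), about {kwh:.1f} kWh per year")
```

Under those assumptions the display draws roughly a third less power at minimum brightness.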

Mac OS X has a built-in colour calibrator which uses a rather different technique, seen in the screen shots here:

http://www.computer-darkroom.com/colorsync-display/colorsync_2.htm

I hope it’s clear to readers that the calibration images above should only be considered for demonstration purposes. Readers should calibrate their display using software made for the purpose, and not by viewing the Vista screen captures displayed in this web page.

Because of the display characteristics of the images, different web browsers, operating systems, OS settings, and hardware displays, they will be rendered differently on different systems. Calibration software either overrides the OS’s colour rendering, or takes it into consideration when displaying the calibration image.

Do these image files have embedded colour profiles? Your OS or browser may or may not assume an sRGB colour profile for non-tagged images. Your graphics software, e.g. Photoshop, uses a different colour-display engine, which makes different assumptions, and has many options for rendering intent. Does Vista’s screenshot function save the raw bits from the calibration video, or the bits as altered by the OS’s display calibration function?
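One way to answer the first question, assuming the files are PNGs, is to look for the colour-related chunks the PNG format defines (`iCCP` for an embedded ICC profile, `sRGB`, `gAMA`, `cHRM`). The sketch below uses only the standard library; the helper names and the tiny generated test image are made up for illustration, and JPEGs (which carry profiles in a different way) are not handled.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
COLOUR_CHUNKS = (b"iCCP", b"sRGB", b"gAMA", b"cHRM")


def colour_chunks(png_bytes):
    """Return the names of colour-related chunks found in a PNG byte string."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png_bytes):
        length, ctype = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        if ctype in COLOUR_CHUNKS:
            found.append(ctype.decode("ascii"))
        if ctype == b"IEND":
            break
        pos += 12 + length  # length field + type + data + CRC
    return found


def _chunk(ctype, data):
    """Build one PNG chunk (used only to make a tiny demo image)."""
    crc = zlib.crc32(ctype + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + ctype + data + struct.pack(">I", crc)


def tiny_png(tag_srgb):
    """Return a 1x1 grey PNG, optionally tagged with an sRGB chunk."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)  # 8-bit greyscale
    idat = zlib.compress(b"\x00\x80")  # filter byte + one mid-grey pixel
    body = _chunk(b"IHDR", ihdr)
    if tag_srgb:
        body += _chunk(b"sRGB", b"\x00")  # rendering intent 0 = perceptual
    return PNG_SIGNATURE + body + _chunk(b"IDAT", idat) + _chunk(b"IEND", b"")


print(colour_chunks(tiny_png(tag_srgb=True)))   # ['sRGB']
print(colour_chunks(tiny_png(tag_srgb=False)))  # []
```

To try it on a saved copy of one of the images, read the file in binary mode and pass the bytes to `colour_chunks`. An empty list means the image is untagged, so how it looks depends on whatever the browser or OS assumes.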

The difference was totally night and day. DVI is just far superior, especially when it comes to crisply rendering high-contrast edges and text.

DVI is the way to go; however, the quality of the analog VGA conversion in most LCDs has improved dramatically in the last few years. They’re still inferior to a pure DVI connection, but the difference is not quite as extreme as it used to be.

As I mentioned in my post, some monitors disable almost all their calibration controls when in digital mode. My circa-2004 SyncMaster 213T is like that. When connected via DVI, I can only adjust brightness. Newer Samsung LCDs don’t suffer from this defect, fortunately…

Readers should calibrate their display using software made for the purpose,

It’s true that calibration is a question of how far down the rabbit hole you want to go, but the three images I posted are a reasonable way to get started. Most people use their displays the way they came from the factory; any kind of basic eyeball calibration is better than that.

There are three flavors of DVI:

DVI-A: analog

DVI-D: digital

DVI-I: both

It seems DVI-I cables are pretty standard, since they support analog or digital (and can have a VGA adapter put on one end).

If you want to make sure you’re in digital mode, most monitors will tell you if you go to their info screen. If you REALLY want to be sure, get hold of a DVI-D cable and use that.

any kind of basic eyeball calibration is better than that

Perhaps something is better than nothing, but is there no free colour calibration for pre-Vista versions of Windows?

Even a simple ramp of controlled grey patches might be an improvement over the photos of that sexy guy. In the following image you should see every tone, and just barely make out the alternating squares in the top strip (from a tutorial at http://www.digitalmasters.com.au/Monitor_Calibration.html):

![]()
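For anyone who would rather generate a plain grey ramp than rely on a downloaded picture, here is a minimal sketch that builds a strip of evenly spaced grey patches as a binary PGM image using only the standard library. The patch count, sizes, and function names are arbitrary choices for illustration, and the result carries no colour profile, so the caveats above about untagged images still apply.

```python
def grey_ramp(steps=16, patch_width=40, height=80):
    """Return rows of 8-bit grey values forming `steps` equal-width patches."""
    levels = [round(i * 255 / (steps - 1)) for i in range(steps)]
    row = [level for level in levels for _ in range(patch_width)]
    return [row[:] for _ in range(height)]


def pgm_bytes(rows):
    """Encode rows of 0-255 values as a binary PGM (P5) greyscale image."""
    height, width = len(rows), len(rows[0])
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + b"".join(bytes(row) for row in rows)


data = pgm_bytes(grey_ramp())
# To look at it, save the bytes and open the file in an image viewer:
#     with open("grey_ramp.pgm", "wb") as f:
#         f.write(data)
print(len(data), "bytes")
```

With the default settings the patches step from 0 to 255 in increments of 17. On a reasonably adjusted display each patch should be distinguishable from its neighbours, including the two darkest and the two brightest.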

I also wonder if the Windows Media Center makes the same gamma curve and other assumptions for TV display as one would for general computer use.

If you highlight all solid-black pixels in the first, you will find that half of the X is indeed visible, over a mostly-pure black background—the background is so dark that the X is invisible (in fact most of the background is solid black #000). Similarly, in the white-shirt photo, about half of the shirt pixels have shifted to solid blocks of white #FFF. Although there are buttons and wrinkles visible, you can play around with colour controls all you want, but the flat areas will remain featureless. These are not good examples of continuous-tone photos. I think something may have happened in capturing the screen which bottomed out the dark tones and blew out the whites.
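The same check can be done numerically instead of by highlighting pixels in an editor. This is only a sketch: the sample list below is toy data standing in for a real image, and in practice the RGB tuples would come from whatever image library you use to load the screenshot.

```python
def clipped_fractions(pixels):
    """Return (fraction pure black, fraction pure white) for RGB tuples."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("no pixels given")
    black = sum(1 for p in pixels if p == (0, 0, 0))
    white = sum(1 for p in pixels if p == (255, 255, 255))
    return black / len(pixels), white / len(pixels)


# Toy data: 6 pure-black, 2 near-black, and 2 pure-white pixels.
sample = [(0, 0, 0)] * 6 + [(12, 12, 12)] * 2 + [(255, 255, 255)] * 2
black, white = clipped_fractions(sample)
print(f"{black:.0%} pure black, {white:.0%} pure white")
# prints "60% pure black, 20% pure white"
```

A large share of pixels sitting at exactly #000 or #FFF in what should be a continuous-tone photo suggests the tones were clipped somewhere before the image reached you, and no monitor adjustment can bring that detail back.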

I hope it’s ok to use these tips to calibrate a CRT display. Thanks a lot!

Photobox (a popular online photo lab in the UK) has a very sensible approach to simplified calibration.

They supply a “calibration print” with your first order and provide the same image on their website. Adjust your monitor so that the image on screen is as close as possible to the print and you have an instant “good enough” calibration.

See http://www.photobox.co.uk/quality.html for the image.

“it was a tweakers paradise”

I about spat out my food when I read that. Pure comedy. I love it.

Preachin to the choir.

The brightness theory is that the more expensive the LCD monitor, the dimmer it can go. And photographers recommend viewing photos at the dimmest setting to get the most out of a photo.

Since most LCD backlights are fluorescent panels, it’s difficult to simply turn down the power to make them look dimmer. If you run a fluorescent panel at lower power, it will just flicker and become very unstable (try a dimmer switch on a fluorescent lamp for a few seconds and you will see. But never try it for too long.)

AFAIK, EIZO monitors are particularly good in this area. I bought one a few years ago and another one last year for a dual-monitor setup (same model, just before they discontinued it). I know they’re expensive, but my eyes are even more expensive. One of them reads 4,000 hours and the other about 1,000 hours now, and I can’t spot any difference between them.

Hi,

Output of MCE goes over VMR9, which boosts the black level a little higher than “really” black. So if you set up your black level with MCE, you lose some grey resolution in games and desktop apps.

So it’s better to set the black level via any greyscale test in a “non-video” application (I use CRTAT) and after that adjust black for MCE via the video colour correction in the NVIDIA video settings. It’s a little annoying because you have to switch between MCE and the NVIDIA panel a lot to get it right, but I know no other way.

- Oliver

I run a dual display with two LCD panels. The video card I have has one DVI output and one VGA output, so one screen runs over DVI and the other over VGA. There is no discernible difference in image quality between the two. Provided you are running at the native resolution of the LCD display, the screen should be able to determine the pixel clock and give you a virtually identical image. Good-quality VGA cables would make a difference, but most computer displays have very short runs (6 feet or less) where you are unlikely to encounter analog artifacts such as ghosting or ringing.

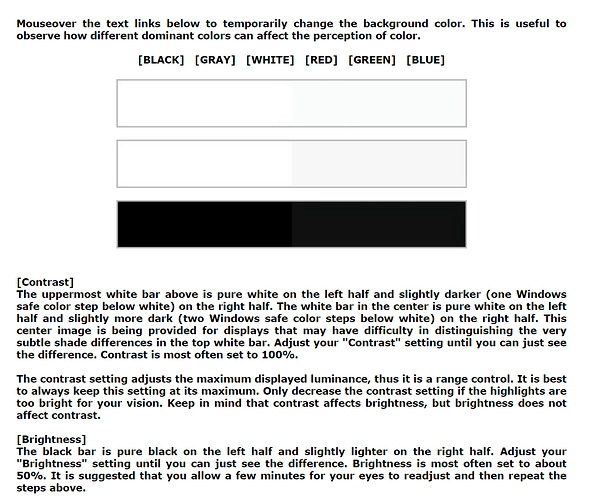

Neat demo of how background colors can affect your perception of colors: mouse over the various colors in brackets ([BLACK], [GRAY], etc.) to see it in action.

John Radke,

You have two crappy monitors. There is a definite difference between the two connection types on a good LCD.

I tend to run my MacBook at one notch of brightness above having the backlight off. For looking at photos or watching video sometimes I’ll turn the brightness up, but for any amount of reading text it burns the eyes out of me, although I do have to turn it down slowly over a couple of minutes to let my eyes get used to it being darker. My 21" Trinitron at work is at about 1/4 brightness and 1/3 contrast and the highest colour temperature I could set (9300K with tweaks) so that whites aren’t yellow.

For Windows 11 and HDR displays, be sure to use the official HDR calibration tool from Microsoft.