@codinghorror Why I don’t share your enthusiasm about HTTPS.

@codinghorror So when do you start on the HTTP/2 bandwagon? I’m partially there on mine, but it does involve “coding” much more than Letsencrypt, which did most of the things for me (incorrectly, I may add), but it wasn’t long before I figured out what they were actually doing.

As for cost, there’s also StartSSL, which is free. I was using it on my blog https://trajano.net for the longest time and just forgot to renew it after a year.

Let’s Encrypt, once set up, just does everything through a cron job, so I’m happier.

You may just tell your DNS resolver to use your www machine as the actual one. I actually did that on mine and got rid of the pesky www. Well, I was sort of forced into it when I was playing around with HSTS and everything (and I mean everything) under trajano.net required HTTPS.

I had to fix my GitHub Pages site to use HTTPS, which wasn’t really possible because it was on a custom domain and they don’t have my private key and cert for the server. So instead I just took over the URL and set up a reverse proxy to GitHub Pages.

There is another way of doing it that I didn’t bother with: using Cloudflare, which provides free SSL proxying for your site. However, it required handing DNS management over to them, which is something I wasn’t keen on.

What we’d likely have is Chrome/Firefox/Safari indicating that the certificate is just “DV” domain validated, rather than a high assurance one.

I still like having HTTPS, aside from it being a technical challenge to myself, a chance to learn how letsencrypt works, and a requirement for HTTP/2 on some earlier servers/browsers.

I was more keen on the HTTP/2 server push stuff when I did the change on my system.

It basically requires people to be on Ubuntu 16.04 Server, other than that it just works. Which reminds me, I need to upgrade this droplet..

edit: OK, this droplet was upgraded to Ubuntu 16.04, let’s see..

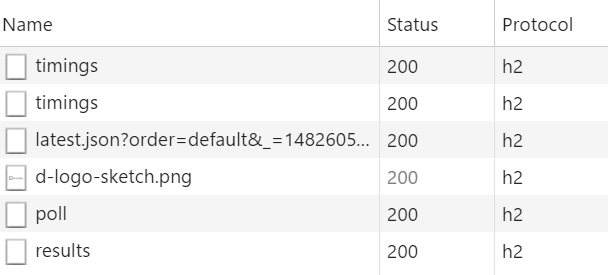

h2, looks good

What I did on my server, since I am not as content heavy as you are, was temporarily add the “stretch” repository to apt-get (https://trajano.net/2016/11/enabling-server-raspberry/). Not something I would recommend for the faint of heart, but it was a fun and successful experiment.

h2 will be better once you get the hang of server side push.

I did a hack (I haven’t blogged about it yet though) on my WordPress install such that the “cache” engine generates a .headers file that contains the text for the Link header:

<If "-f '%{REQUEST_FILENAME}.headers'">

Header add Link expr=%{file:%{REQUEST_FILENAME}.headers}

</If>

The headers file looks like this (warning: long line):

<https://www.google-analytics.com/analytics.js>; rel=preload; as=script,<https://trajano.net/wp-content/cache/autoptimize/js/autoptimize_c63d4311ffb926fb21eb61b2166958d4.js>; rel=preload; as=script,<https://trajano.net/wp-content/cache/autoptimize/css/autoptimize_d609d62f3b62246893f99db63ac98577.css>; rel=preload; as=style,<https://trajano.net/wp-content/uploads/2016/08/logo.png>; rel=preload; as=image,<https://trajano.net/wp-content/uploads/2016/08/logo.png>; rel=preload; as=image,<https://trajano.net/wp-content/uploads/2016/08/logo.png>; rel=preload; as=image,<https://www.trajano.net/wp-content/uploads/2014/01/logo-blog-twentyfourteen1.png>; rel=preload; as=image,<https://trajano.net/wp-content/uploads/2016/11/http2-server-push-2.png>; rel=preload; as=image,<https://trajano.net/wp-content/uploads/2016/11/vsto-672x372.png>; rel=preload; as=image,<https://trajano.net/wp-content/uploads/2016/11/32292df-500x372.jpg>; rel=preload; as=image,<https://trajano.net/wp-content/themes/twentyfourteen/css/ie.css?ver=20131205>; rel=preload; as=styl

You don’t have to use relative URLs; you can put in full URLs, even to resources outside your site, so you can preload from a CDN before your server gets loaded.
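For anyone who wants to generate such a .headers file themselves, here is a minimal Python sketch of how the Link value could be assembled. It is not the code from the WordPress hack above; `build_link_header` and the de-duplication step are my own assumptions (the sample line lists logo.png more than once, and I'm assuming repeating the same preload buys nothing).

```python
from typing import Iterable, List, Set, Tuple


def build_link_header(resources: Iterable[Tuple[str, str]]) -> str:
    """Join (url, as_type) pairs into a single Link header value.

    Duplicate URLs are skipped (an assumption of this sketch).
    """
    seen: Set[str] = set()
    parts: List[str] = []
    for url, as_type in resources:
        if url in seen:
            continue
        seen.add(url)
        parts.append(f"<{url}>; rel=preload; as={as_type}")
    return ",".join(parts)


# Full URLs are fine, including ones pointing outside your own site.
resources = [
    ("https://www.google-analytics.com/analytics.js", "script"),
    ("https://trajano.net/wp-content/uploads/2016/08/logo.png", "image"),
    ("https://trajano.net/wp-content/uploads/2016/08/logo.png", "image"),
]
header_value = build_link_header(resources)
print(header_value)
```

Writing `header_value` to `<page file>.headers` would give a file of the kind the Apache `<If>` block above reads.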

It’s a simple hack, but preloading things can shave a few milliseconds off the render time.

https://www.webpagetest.org/result/161224_NS_T9M/1/details/#waterfall_view_step1

I think HTTPS is a very short-sighted and very desperate solution.

I see it a lot like advocating to send steel boxes instead of paper envelopes because the post office, the couriers and the local gang started looking through everybody’s mail.

The URL is always known - so everybody in the chain knows what you’re doing. Saying that when people read pages over HTTPS the evil people are not able to see what they read is just not true - they would know where you went and what the article contains, so they know what you read.

The argument about altering the content is very very important and I worry about this a lot - this can be solved with page signatures or some kind of protocol for verifying that the content is correct (as you do with a checksum for downloaded files) - a trusted checksum provider where publishers can register the content of a page and my browser can check whether the page content (or part of the page…) is correct.
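To make the checksum idea concrete, here is a rough Python sketch of what the browser-side check could look like. The "trusted checksum provider" is hypothetical, so the registered digest is simply computed locally here; in a real scheme it would have to arrive over a channel the attacker cannot alter, which is the hard part.

```python
import hashlib
import hmac


def sha256_hex(content: bytes) -> str:
    """Return the SHA-256 digest of the page content as lowercase hex."""
    return hashlib.sha256(content).hexdigest()


def content_is_unaltered(content: bytes, registered_digest: str) -> bool:
    """Compare the received content against the digest the publisher registered."""
    return hmac.compare_digest(sha256_hex(content), registered_digest.lower())


# What the publisher registered (stand-in for the hypothetical provider lookup).
published = b"<html><body>Original article</body></html>"
registered = sha256_hex(published)

# What arrives after someone in the middle injected content.
tampered = published.replace(b"Original", b"Injected ad + original")

print(content_is_unaltered(published, registered))
print(content_is_unaltered(tampered, registered))
```

Note that this only detects changes; it does nothing to hide what was read.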

Different argument, DNS queries are also known. Using a VPN fixes this if it is a concern.

You will be reinventing https .. poorly.

I recently typed a how-to guide on setting up letsencrypt certs on IIS. It’s pretty easy and the tooling is fantastic!

With Free TLS Certificates And Fantastic Tooling, There Are No Longer Any Excuses Not To Use HTTPS

From the article “browsers, please don’t kill HTTP”, linked above by @JosephErnest as “Why I don’t share your enthusiasm”:

cash for SSL certificates

I have shared hosting. I paid $15 to a Comodo reseller for a 3-year DV certificate, which is about an order of magnitude cheaper than the domain plus hosting.

Even with the free Let’s Encrypt initiative, maintaining HTTPS requires huge technicity

Some shared hosts, such as DreamHost, have a button to handle the “huge technicity” for you.

StartSSL

This CA has been distrusted because of backdating.

What we’d likely have is Chrome/Firefox/Safari indicating that the certificate is just “DV” domain validated.

The Comodo Dragon browser, a Chrome clone distributed by a CA, already has such a warning for DV certificates, warning the user that “the organization operating [this site] may not have undergone trusted third-party validation that it is a legitimate business.” (Screenshot) But I don’t see “DV may be typosquatting!!!11” warnings spreading to other browsers for two reasons. First, users would just turn it off because even Facebook has been known to use DV certificates. Second, both Mozilla and Google are sponsors of Let’s Encrypt, a not-for-profit DV CA.

The URL is always known

Only the origin (scheme, hostname, and port) of an HTTPS connection is transmitted in cleartext; nothing about the path or query parameters is exposed other than their length. The port is transmitted in the TCP header, the scheme is narrowed down by the presence of a ClientHello, and the cleartext hostname is in the Server Name Indication field of the ClientHello.
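As a small illustration of that split, this Python sketch sorts the parts of a URL into what an on-path observer can read and what travels inside the encrypted stream. The function name and the dictionary layout are made up for the example, and it assumes the client sends SNI; it models nothing beyond the description above.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"https": 443, "http": 80}


def split_by_visibility(url: str) -> dict:
    """Separate the origin (visible on the wire) from the rest of the URL."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return {
        # Port from the TCP header, hostname from SNI, scheme inferred.
        "cleartext": {
            "scheme": parts.scheme,
            "hostname": parts.hostname,
            "port": port,
        },
        # Sent only after the TLS handshake; an observer sees length at most.
        "encrypted": {"path": parts.path, "query": parts.query},
    }


view = split_by_visibility(
    "https://trajano.net/2016/11/enabling-server-raspberry/?ref=thread"
)
print(view["cleartext"])
print(view["encrypted"])
```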

The argument about altering the content is very very important and I worry about this a lot - this can be solved with page signatures

In theory, TLS with a signature-only cipher suite would solve this, but for various reasons, browser makers don’t want to implement this.

I played around a little bit with HTTPS (and HTTP/2), and in practice it’s not as secure (or fast with HTTP/2) as I thought it would be. The problem is that proxies (which are quite common) break the end-to-end encryption.

One example is the Superfish adware that Lenovo pre-installed on their laptops in 2014:

In short, the adware program intercepts all internet traffic (including HTTPS), and injects its own advertisement in web pages. It then re-encrypts the page with its own certificate, so users will still see it’s a valid HTTPS connection. It’s valid because the Superfish root certificate is installed in the Windows root certificate store.

As bad as this sounds, this is also what anti-virus programs do when they have some “web protection” feature enabled. In order to scan for suspicious content, they also need to intercept internet traffic (including HTTPS), after which they re-encrypt it with their own certificate and send it to the browser. On my work PC here, I have ESET NOD32 installed. When I go to an HTTPS site and inspect the certificate, I see it’s signed with an ESET root certificate*, and not with the certificate from the website itself, thus breaking the end-to-end encryption you thought you had.

At the very least, this negates the performance gains you have with HTTP/2.

(* ESET makes exceptions for many common HTTPS sites, like Google, Facebook, etc.)
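If you want to check this from a script rather than by clicking the padlock, a sketch along these lines should work with Python's standard `ssl` module. The helper names, the "Example AV Vendor" issuer, and the allow-list of expected issuers are placeholders of mine, and whether a given product also intercepts Python's connections is something I have not verified.

```python
import socket
import ssl
from typing import Iterable, Optional


def fetch_peer_cert(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Open a TLS connection and return the certificate the client actually received."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_organization(cert: dict) -> Optional[str]:
    """Pull organizationName out of the issuer field of a getpeercert() dict."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == "organizationName":
                return value
    return None


def looks_intercepted(cert: dict, expected_issuers: Iterable[str]) -> bool:
    """True when the issuer is not one of the CAs you expect for this site."""
    return issuer_organization(cert) not in set(expected_issuers)


# Sample dicts in the shape getpeercert() returns; issuer names are illustrative.
direct = {"issuer": ((("countryName", "US"),), (("organizationName", "Let's Encrypt"),))}
proxied = {"issuer": ((("organizationName", "Example AV Vendor"),),)}

print(looks_intercepted(direct, ["Let's Encrypt"]))
print(looks_intercepted(proxied, ["Let's Encrypt"]))
```

On a live machine you would call `looks_intercepted(fetch_peer_cert("example.org"), [...])` with whatever issuer you know the site really uses.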

I’ve been using an extension from EFF called HTTPS Everywhere for a while now to force some websites into HTTPS.

The good part is most of the other sane devices would not have these protections, or at least not to the asinine level that Lenovo has. HTTP/2 has some pretty good performance gains when you combine it with HTTP/2 push. But we’re talking about saving a few hundred milliseconds, not something major.

It’s more for the big scale applications where they would have time and money to spend the effort to improve the performance. As for my part I did get HTTP/2 push working on my web site and it does save a little bit of time because the requests can start as soon as possible.

Although at present it does not help much on my site, because the HTML content is so tiny that by the time it finishes processing the HTTP header block the content would have been downloaded, parsed and rendered already.

https://www.webpagetest.org/result/170105_SY_1EMQ/1/details/#waterfall_view_step1

Now I could make it show more speed by embedding the CSS into the HTML, which would also remove the FOUC and critical CSS issues. But that would only benefit HTTP/2 clients.

The good part is

- Safari on iOS and likely on macOS

- Microsoft Edge

- Firefox

- Chrome

… all support HTTP/2 on their current versions.

update

Actually, after posting the above message I wanted to check what the result would be like, and it is as I had expected:

https://www.webpagetest.org/result/170105_SC_1J4K/1/details/#waterfall_view_step1

Without the “styles.css” it will take Chrome a bit more processing time. Since it took a little more processing time to render the local page, you can see that the other components actually start loading sooner rather than later, because they are already being pushed in by Chrome.

The net result is a slight 500ms speed increase to start rendering. But of course that could just be plain network latency. There is a reduction of 200 bytes though.

"The performance penalty of HTTPS is gone, " – and then takes us to a link that compares three versions of SSL encrypted connections: SPDY, HTTPS, and HTTP/2. Be that as it may, the issue for me is Internet Of Things. Things that support SSL encryption and authentication require much more memory, and, with few exceptions, high speed 32 bit processors. HTTP 2.0 requires much less memory, and works ok on 50KHz 8 bit processors.

Thank you very much for this topic. As you say in the article, “That is, until Let’s Encrypt arrived on the scene.” We believe that is correct, as we always do.