I think that you are spot on with this post. Computers are jerks to humans. Also, I was surprised that a ~500 MHz processor was about grandmaster level in chess. That makes AlphaGo more feasible in my mind, but also impressive. The number of GPUs that it used, as you described it, was an insane amount. Humans seem really small when compared to how thoroughly computers can destroy us. The fact that AlphaGo lost a game also says something about humans not being able to create a perfect machine. Overall, I found this information really interesting.

These stunt matches are a bit unfair, because the computers have trained beforehand on the games of champions, but the human champion has never played against the computer. In both the Kasparov and Lee matches, the human player was confounded by moves that no human had previously considered. Humans will now train against computers, ushering in a golden age of human Go players.

AlphaGo seems to be better than Deep Blue. The Deep Blue match wasn’t really man vs. machine: the engineers were tinkering with the program during the match, and promptly dismantled the machine at the end. Perhaps they were afraid of a rematch. AlphaGo is not available to the general public, but it’s built out of standard machine learning components. Perhaps patented, though.

> we know that computers will continue to beat us at virtually every game we play

I think that’s true for a subset of games. I’m reluctant to think that a computer would win a game of Battlestar Galactica: The Board Game against my group.

For starters, he would be the very first to be sent to prison. He’s obviously a toaster, and no amount of good deeds would convince us otherwise… He could be on the winning team, granted, but he would be a burden. The team would win despite him, not thanks to him.

Tying this in with your newer post about coding games, and one of the comments there: Advent of Code.

Many of the “part 2” problems on Advent of Code (and some of the part 1 problems) revolved around this change in how we program to solve problems. For example, part 1 might be “find the best combination of weapons and armor for an RPG character,” and you could solve it with brute force. But part 2 would be “what’s the best order of spells to cast to survive a boss battle using the least mana?” The combinations were so numerous that brute force was very likely to fail in most programming languages and on most machines, so you’d have to attack the problem differently than you might expect. Maybe still a sort of brute force, but with randomization and aborting dead-end attempts to make things efficient.
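To make the “aborting dead-end attempts” idea concrete, here is a minimal branch-and-bound sketch in Python. The battle rules are a made-up, simplified model (two hypothetical spells with a fixed cost, damage, and healing, and the boss hits back after each cast), not the actual Advent of Code puzzle. The search explores spell sequences depth-first and abandons a branch as soon as the player dies, runs out of mana, or has already spent at least as much as the cheapest win found so far.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical, simplified spell model for illustration only;
# these are NOT the real Advent of Code rules.
@dataclass(frozen=True)
class Spell:
    name: str
    cost: int
    damage: int
    heal: int = 0


SPELLS: List[Spell] = [
    Spell("missile", cost=53, damage=4),
    Spell("drain", cost=73, damage=2, heal=2),
]


def min_mana(player_hp: int, boss_hp: int, boss_damage: int, mana: int) -> Optional[int]:
    """Cheapest total mana that kills the boss, or None if no sequence wins."""
    best: List[Optional[int]] = [None]  # cheapest winning total found so far

    def search(p_hp: int, b_hp: int, mana_left: int, spent: int) -> None:
        # Bound: this branch can no longer beat a win we already have.
        if best[0] is not None and spent >= best[0]:
            return
        for spell in SPELLS:
            if spell.cost > mana_left:
                continue  # can't afford it: dead end
            total = spent + spell.cost
            new_boss = b_hp - spell.damage
            if new_boss <= 0:
                # Boss dies on our cast; record the win if it is cheaper.
                if best[0] is None or total < best[0]:
                    best[0] = total
                continue
            new_player = p_hp + spell.heal - boss_damage  # boss hits back
            if new_player <= 0:
                continue  # player dies: abandon this line
            search(new_player, new_boss, mana_left - spell.cost, total)

    search(player_hp, boss_hp, mana, 0)
    return best[0]
```

For example, `min_mana(5, 10, 3, 500)` finds a 179-mana win (drain, missile, missile), while the pure-missile line dies on the second boss hit. The `spent >= best` bound is what keeps the tree small once any win is known.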

This sounds like a knapsack problem to me:
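For reference, the textbook 0/1 knapsack (pick items, each at most once, to maximize total value under a weight limit) can be solved with dynamic programming instead of trying every combination. A minimal Python sketch follows; the item list in the usage line is invented for illustration, and whether a particular Advent of Code puzzle maps cleanly onto this formulation is a separate question.

```python
from typing import List, Tuple


def knapsack(items: List[Tuple[int, int]], capacity: int) -> int:
    """Maximum total value of items whose total weight fits in capacity.

    items: (weight, value) pairs; each item may be used at most once.
    """
    # best[c] = best value achievable with total weight <= c
    best = [0] * (capacity + 1)
    for weight, value in items:
        # Walk capacities downward so each item is counted at most once.
        for c in range(capacity, weight - 1, -1):
            best[c] = max(best[c], best[c - weight] + value)
    return best[capacity]


# Hypothetical items as (weight, value); capacity 7 picks weights 3 + 4.
print(knapsack([(1, 1), (3, 4), (4, 5), (5, 7)], 7))  # 9
```

The table has one entry per capacity, so the work grows with `len(items) * capacity` rather than with the number of possible subsets.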

Indeed. I didn’t spend much time identifying the type of problem because I’m not that sophisticated. Somehow I managed to solve them all with JavaScript anyway (occasionally leaning on help from fellow Redditors…). But my point was just that the two posts together reminded me of attacking those challenges: seeing the brute-force methods fail, and then finding some way to let the program learn along the way that certain pathways were duds, so it would keep trying something different. It’s about 1/1,000,000th the level of learning that goes into neural network algorithms, but it reminded me of it enough to comment.

Your cost per floating point operation chart is incorrect on the 1961 line. That should be $8,300,000,000,000 ($8.3 trillion), not just $8,300,000,000 ($8.3 billion).

Jeff, I’m not sure what you mean by “Pac Man is beyond the capabilities of Deep Mind.” Teaching a computer to play Pac Man is used as the end-of-semester test for college students at Berkeley and is doable with just some basic Q-learning techniques (https://youtu.be/jUoZg513cdE?t=40m54s). It’s also featured in the OpenAI playground, which anyone can code against.
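As a rough illustration of what “basic Q-learning” means, here is a minimal tabular sketch in Python. It uses a hypothetical six-cell corridor instead of Pac Man (the Berkeley project has its own framework, which is not reproduced here), and the learning rate, discount, and exploration values are arbitrary choices. The agent keeps a table of action values and nudges each entry toward the observed reward plus the discounted best value of the next state.

```python
import random
from typing import List, Tuple

# Hypothetical toy environment: cells 0..5 in a line; reaching cell 5
# gives reward +1 and ends the episode. Actions: 0 = left, 1 = right.
N_STATES = 6
MOVES = (-1, +1)


def step(state: int, action: int) -> Tuple[int, float, bool]:
    nxt = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                # Exploit, breaking ties randomly so early episodes still wander.
                top = max(q[state])
                action = rng.choice([a for a in range(2) if q[state][a] == top])
            nxt, reward, done = step(state, action)
            # Q-learning update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q: List[List[float]]) -> List[str]:
    # Best learned action for each non-terminal cell.
    return ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]


print(greedy_policy(train()))
```

With these settings the learned greedy policy should point right in every non-terminal cell. Note that the agent is handed the state (its cell number) directly, which is the “give it a maze” setting rather than learning from raw pixels.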

I think you misunderstand. Deep Mind looked only at pixels; that is, it had to figure out the game and its strategies from first principles, based on the patterns of pixels on the screen.

If you give a computer a maze structure and treat it like a maze, sure, that’s easy. But that is a far cry from saying “here’s a bunch of pixels, you figure out what they do.”

https://universe.openai.com/ is aimed exactly at that problem

There is movement on that

Details on the difficult games here: Montezuma’s Revenge, Pitfall, Solaris, and Skiing:

Here’s a neat summary of it learning to play Breakout:

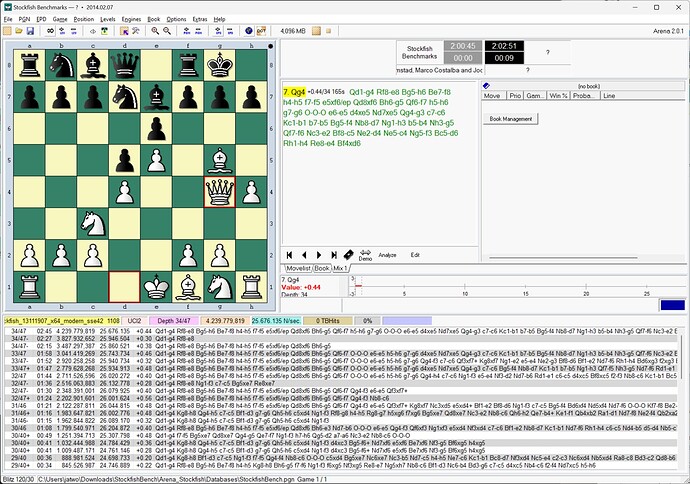

Also, regarding Fritz 9 and the brute-force benchmarks: 4 cores, 17m; 8 cores, 28m. I just tested my fancy 16-core 5950X and got 38m. Diminishing returns with more cores.
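To put numbers on the diminishing returns, here is a small Python calculation using only the three figures quoted above (in whatever units the benchmark reports). It treats the 4-core result as the baseline and compares the measured speedup with ideal linear scaling. This is only a rough sketch: the figures come from different machines, so clock speed and per-core performance differences are mixed in with the core count.

```python
from typing import Dict, List, Tuple

# Fritz 9 brute-force figures quoted above, keyed by core count.
RESULTS: Dict[int, float] = {4: 17, 8: 28, 16: 38}


def scaling(results: Dict[int, float], base_cores: int) -> List[Tuple[int, float, float]]:
    """Return (cores, speedup vs. baseline, parallel efficiency) rows."""
    base = results[base_cores]
    rows = []
    for cores, score in sorted(results.items()):
        speedup = score / base
        ideal = cores / base_cores  # perfect linear scaling
        rows.append((cores, speedup, speedup / ideal))
    return rows


for cores, speedup, eff in scaling(RESULTS, base_cores=4):
    print(f"{cores:2d} cores: {speedup:.2f}x speedup, {eff:.0%} of linear")
```

Doubling from 4 to 8 cores gives about 1.65x, and quadrupling to 16 gives only about 2.24x, roughly 56% of linear scaling.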

And powering it all: lots and lots of GPUs.

But there’s no need to buy all this hardware; you can rent GPUs in the cloud by the minute.

Current results with a 16-core AMD 5950X: 25,676 kN/s.

Per the benchmark results, that’s a bit higher (roughly 11.5%) than the old 24-core CPUs at 23,020 kN/s.